To bring cloud-grade AI infrastructure on-premises, AWS launches “AI Factories”

Deploying AI across enterprise environments is no easy task. Chief among the obstacles is working out how to accommodate each organization’s specific requirements, datasets, and legacy infrastructure. Startups have it easier: with fewer legacy systems to integrate, they can adopt new solutions quickly. Established companies rarely have that kind of freedom.

To address this issue, Amazon Web Services (AWS) has introduced a new offering: AWS AI Factories. The product aims to give enterprises and government agencies access to high-performance AI infrastructure directly in their own data centers, reducing reliance on public cloud services in a limited set of cases.

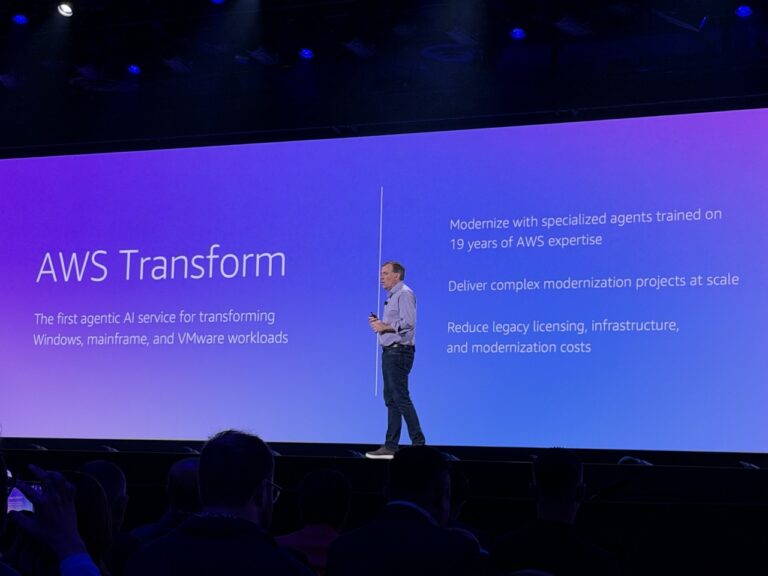

The announcement came at AWS re:Invent 2025 in Las Vegas and reflects a growing trend among major cloud providers to deliver cloud-class AI capabilities in controlled, on-premises environments. Demand for this model is rising among organizations subject to strict data-sovereignty laws and regulatory requirements.

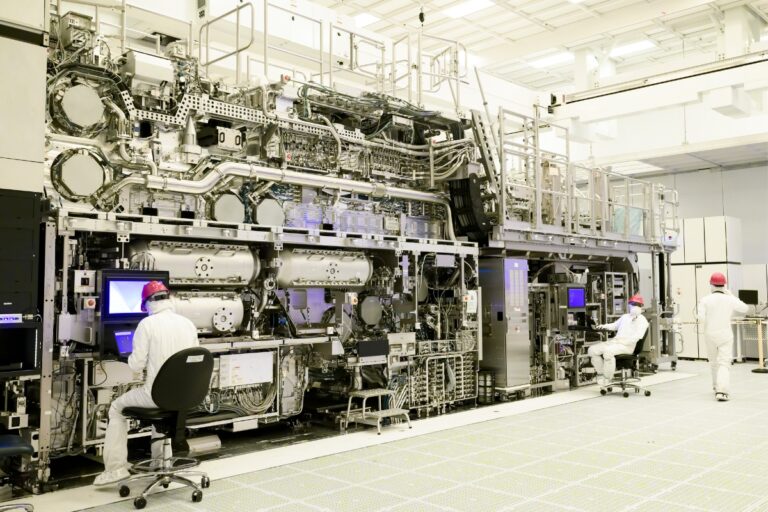

AI Factories combine AWS Trainium accelerators, NVIDIA GPUs, specialized low-latency networking, and high-performance storage systems. The company says the setup is designed to reduce the time and complexity of building AI environments independently, an effort that often spans years because of procurement, integration, and optimization challenges.

The new service also bundles access to AWS’s AI software ecosystem, including Amazon Bedrock and Amazon SageMaker, giving customers immediate access to foundation models without negotiating separate agreements with model providers. According to AWS, the environments operate as isolated, dedicated deployments for each customer or community, offering physical and operational separation from public cloud systems while still integrating with AWS services where needed.

The move is significant for sectors such as government, healthcare, finance, and critical infrastructure, where data locality and security rules often prevent broad use of public cloud AI tools. Organizations supply only the physical space and power while AWS delivers and manages the infrastructure, and the company positions AI Factories as a faster route to deploying large-scale AI and training proprietary large language models.

Industry observers see the launch as part of a broader push to meet surging demand for compute-intensive AI workloads amid growing data privacy and sovereignty concerns. For many organizations, the challenge is not only building AI capabilities but doing so in environments that meet national or sector-specific compliance frameworks. AWS’s offering enters a landscape where similar hybrid and on-premises AI solutions are also gaining traction.

By reducing infrastructure complexity, AWS aims to let customers focus more on developing and scaling AI applications. However, the success of AI Factories will likely depend on long-term performance, integration flexibility, and how organizations balance the benefits of cloud-aligned hardware with the control of on-prem deployment.