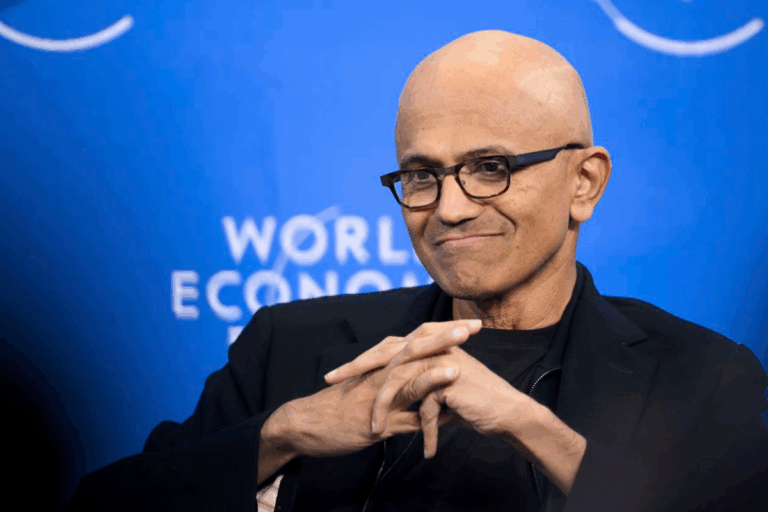

Power, not chips, is the biggest obstacle for AI, says Microsoft chief

In a recent joint interview with OpenAI CEO Sam Altman, Microsoft CEO Satya Nadella shifted the conversation about AI development when he said that energy availability, rather than hardware supply, has become the central challenge in building AI infrastructure.

According to Nadella, the primary obstacle to scaling large AI systems today is not a shortage of GPUs but a shortage of electricity to power them. “The biggest issue we are now having is not a compute glut, but it’s power,” Nadella said. “It’s sort of the ability to get the builds done fast enough close to power.”

Nadella added that Microsoft has found itself with more hardware in inventory than its data centers can run, and he identified electricity as the missing piece.

The growing energy demands of AI are now affecting every part of the digital ecosystem. Modern AI-focused data centers can consume as much electricity as a small city. Some hyperscale facilities now under construction are expected to need up to 20 times more power than today's data centers, with individual campuses projected to draw as much as 2 gigawatts, roughly 70% of Lebanon’s total power capacity.

To meet these demands, data center operators are rushing to secure so-called “warm shells,” facilities already equipped with power and cooling infrastructure. Because compute hardware cannot go online until these utilities are in place, cloud providers and AI firms often wait months to deploy GPUs, leaving servers idle while local power constraints are resolved.

Nadella candidly explained the issue: “You may actually have a bunch of chips sitting in inventory that I can’t plug in. In fact, that is my problem today. It’s not a supply issue of chips; it’s actually the fact that I don’t have warm shells to plug into.”

Since the global GPU shortage eased, industrial-scale AI operations have contributed to noticeable increases in electricity costs in many regions. The U.S. is among the hardest-hit countries, with residential power bills in some states up to 36% higher.

The U.S. is the world’s second-largest electricity producer, behind only China, yet its generation is not growing as fast as demand. Recent reports cited this energy crunch as a major reason for Microsoft’s decision to ship tens of thousands of AI chips to the UAE, where the company is investing $15.2 billion in AI.

AI’s enormous power draw is also intensifying water use. Many data centers rely on water-based or liquid-cooling systems to maintain GPU performance, which creates challenges in drought-prone regions. Some companies have been compelled to relocate their operations to cooler climates to reduce both environmental impact and costs.

As competition for energy increases, major technology companies are directly negotiating with utilities to gain priority access to electricity, sometimes leading to overlapping projects that strain local grids.

Meanwhile, speculation is increasing that future consumer hardware could run advanced AI models like GPT-5 or GPT-6 locally at much lower power levels. Nadella and Altman indicated that as semiconductor technology advances, these low-power devices might lessen reliance on large centralized data centers. That possibility is already affecting investor sentiment, prompting questions about whether today’s multi-billion-dollar infrastructure investments will stay viable as AI computation moves closer to the edge.