New YouTube feature lets you fight back against AI deepfakes

For years, concerns have been raised about the rise of deepfakes. In the beginning, it took hours of footage and audio to produce a somewhat convincing deepfake, which often didn’t hold up under scrutiny. Today, all you need is a smartphone and an app like OpenAI’s Sora to create a convincing deepfake of nearly anyone. As a result, the internet has been flooded with deepfakes, and YouTube is apparently working to address the fallout.

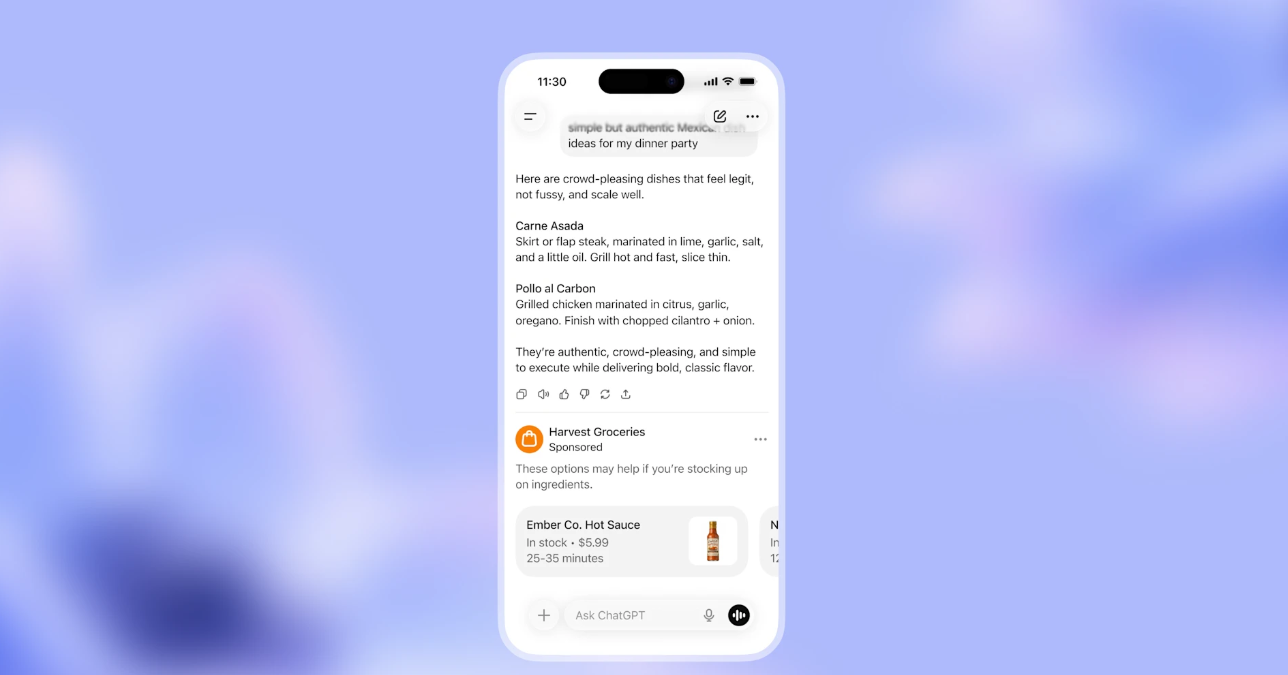

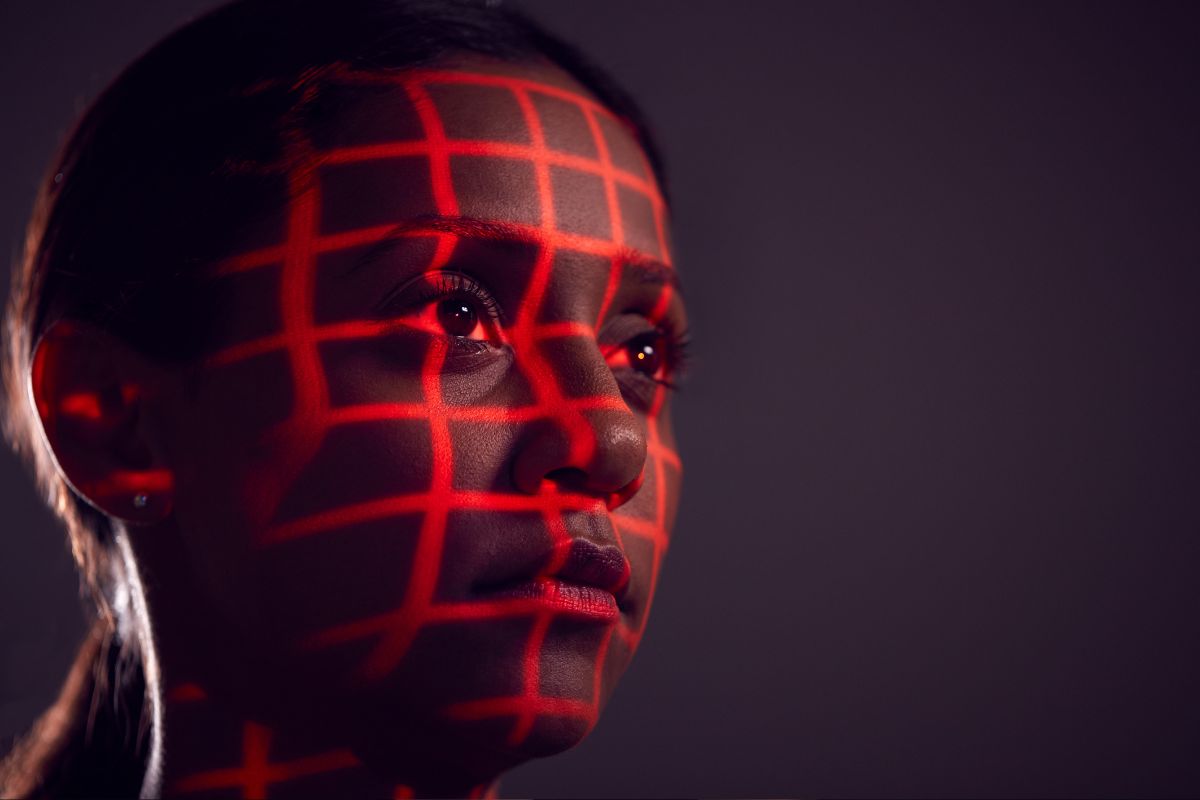

A few days ago, YouTube announced that creators enrolled in its Partner Program will gain access to a new AI detection tool that automatically identifies and reports unauthorized videos using their likeness. As shown, the tool lets creators review flagged videos in the Content Detection tab in YouTube Studio after verifying their identity. If any results appear to feature AI-generated reproductions, creators can submit a formal takedown request.

The first group of eligible creators has already received email notifications, with a wider rollout planned over the coming months. However, YouTube warned early adopters that false positives might be common, as the feature is still in development. This means the results could include legitimate uploads of the creator’s own content, which limits the tool’s effectiveness.

The new system functions similarly to YouTube’s existing Content ID tool, which detects copyrighted audio and video and lets the original owners submit takedown notices or copyright strikes. This similarity may be concerning, as Content ID is known to be misused by bad actors, to the detriment of many creators.

YouTube initially introduced this feature last year and began testing it in December through a pilot program. In its announcement, YouTube explained that “through this collaboration, several of the world’s most influential figures will gain early access to technology designed to detect and manage AI-generated videos that replicate their likeness — including their facial features — at scale on the platform.”

Although most people view the new step positively, YouTube itself plays a part in the current deepfake problem. The platform and its parent company, Google, are among a growing list of tech companies developing AI-powered video creation and editing tools. While these tools are not intended for making deepfakes or harmful content, in practice, oversight is weak, and safety measures are almost absent.

The likeness detection feature is one of several efforts to handle AI-created content on YouTube. Earlier this year, the platform started requiring creators to disclose when their uploads contain AI-generated or altered material, and it implemented a strict policy against AI-generated music that copies an artist’s unique voice or performance style.