China drafts rules for human-like AI chat services

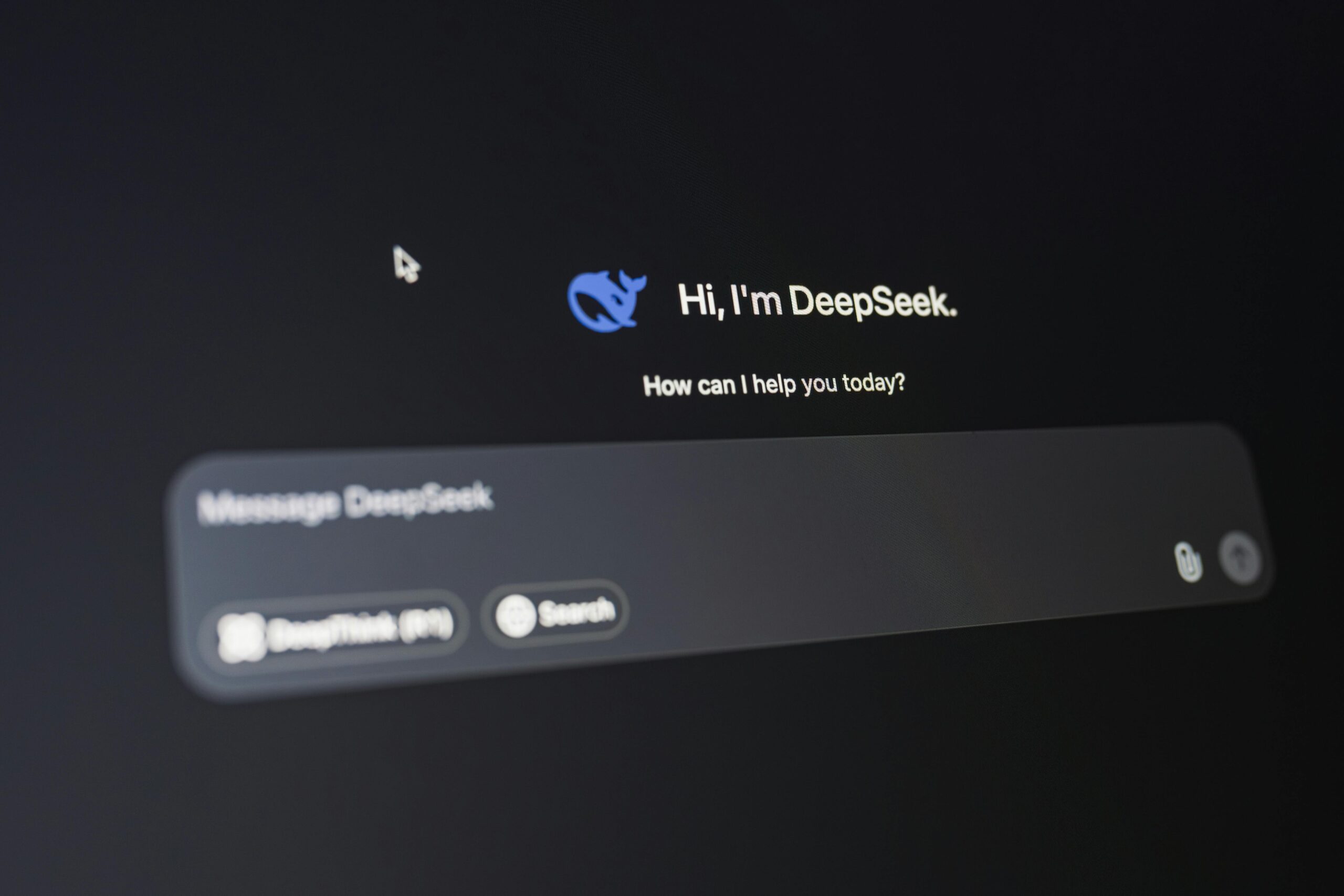

The Cyberspace Administration of China (CAC) has issued draft rules for public comment that would tighten oversight of artificial intelligence services designed to mimic human personalities and engage users in emotional interaction.

The proposal targets consumer-facing AI products and services offered to the public in China that present simulated human personality traits, thinking patterns, and communication styles. It would cover emotional interaction delivered through text, images, audio, video, or other formats.

Under the draft, providers would be required to display reminders warning users against excessive use and to step in when users show signs of addiction. The rules also outline responsibilities across the product lifecycle, including requirements for algorithm review, data security, and personal information protection.

The draft also addresses potential psychological risks. Providers would be expected to identify certain user states, assess users’ emotions and dependence on the service, and take “necessary measures” if users show extreme emotions or addictive behavior, the proposal says.

In addition, the draft sets content and conduct restrictions, stating that services should not generate content that endangers national security, spreads rumors, or promotes violence or obscenity.

China has introduced multiple regulatory frameworks in recent years for algorithm-driven and generative AI services, as authorities seek to set safety and governance requirements alongside rapid consumer adoption.