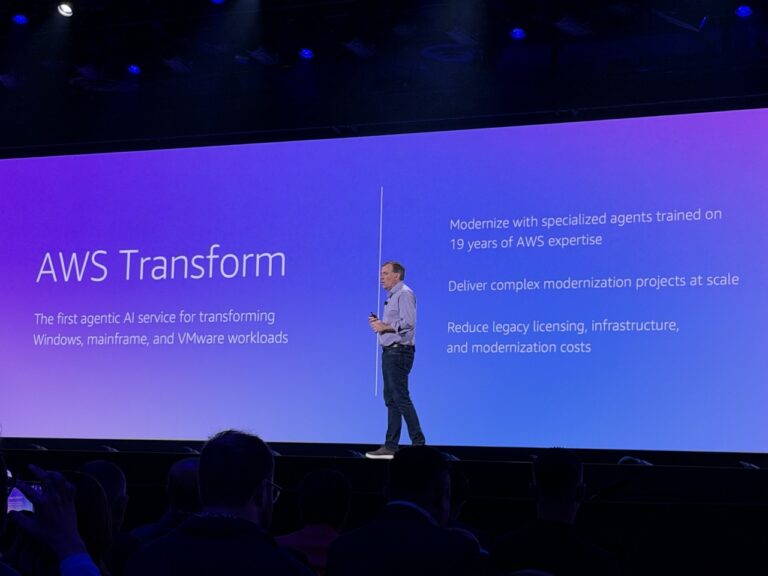

A single point of failure triggered the recent AWS outage, company confirms

Two weeks earlier, an outage at Amazon Web Services (AWS) disrupted dozens of major online platforms worldwide, temporarily taking down services such as Amazon, Alexa, Ring, Snapchat, Reddit, Fortnite, ChatGPT, and the Epic Games Store. The incident lasted about 16 hours and spread globally before AWS restored operations after addressing what it called a series of “cascading” system failures.

According to Amazon, the disruption began at 11:48 PM PDT on October 19 (10:48 AM on October 20 in the UAE), when users started experiencing widespread latency and connection errors in the US-EAST-1 region (Northern Virginia). The company identified the problem as a DNS resolution issue affecting the DynamoDB API endpoint.

Engineers repaired the failure in about 11 hours, but it took roughly 16 hours for all impacted services to fully recover, causing outages across different time zones and countries.

This type of outage is not unusual among large cloud providers. However, its scope and reach raised many tough questions about how a disruption at this level could still happen in a supposedly distributed network.

According to Ars Technica, the outage was caused by a software bug in a DNS management component used by DynamoDB, one of AWS’s main database systems. The flaw caused a race condition (a timing conflict between overlapping processes) that allowed an outdated DNS plan to overwrite a newer one, erasing IP addresses for a critical regional endpoint.
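The failure mode described above can be illustrated with a small sketch. This is not AWS's code: the `DnsPlan` and `Endpoint` classes, the `generation` counter, and the endpoint name are all hypothetical, and the stale plan is given an empty address list purely to mimic the erased records. The sketch contrasts a "last writer wins" update with one that rejects any plan older than the one already applied.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DnsPlan:
    generation: int        # hypothetical counter: higher means newer
    addresses: list[str]   # IP addresses the endpoint should resolve to


@dataclass
class Endpoint:
    name: str
    plan: Optional[DnsPlan] = None

    def apply_unchecked(self, plan: DnsPlan) -> None:
        # Last writer wins: a delayed worker can clobber a newer plan.
        self.plan = plan

    def apply_guarded(self, plan: DnsPlan) -> bool:
        # Reject any plan that is not strictly newer than the current one.
        if self.plan is not None and plan.generation <= self.plan.generation:
            return False
        self.plan = plan
        return True


# Illustrative data only (addresses come from the documentation range).
stale_plan = DnsPlan(generation=1, addresses=[])
fresh_plan = DnsPlan(generation=2, addresses=["192.0.2.10", "192.0.2.11"])

# Simulated race: the fresh plan lands first, then a slow worker
# finishes applying the stale one.
unchecked = Endpoint("db.example.internal")
unchecked.apply_unchecked(fresh_plan)
unchecked.apply_unchecked(stale_plan)

guarded = Endpoint("db.example.internal")
guarded.apply_guarded(fresh_plan)
stale_accepted = guarded.apply_guarded(stale_plan)

print(unchecked.plan.addresses)  # the endpoint has lost its addresses
print(guarded.plan.addresses, stale_accepted)
```

In a real system the comparison and the write would also need to happen atomically (for example through a conditional write in the backing store). The sketch shows only the ordering check.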

That single error left DynamoDB unreachable, which in turn caused many dependent services to malfunction. The result was widespread disruption across services that relied on it for data access, authentication, or routing.

Data from Ookla’s Downdetector revealed the scale of the disruption. The platform documented over 17 million user reports from 60 countries. The most affected services included Snapchat, Roblox, Reddit, and Amazon’s retail and Ring services. The same regional failure also disrupted government websites, financial institutions, and educational tools.

US-EAST-1 is Amazon’s oldest and most heavily used data center region, serving as the backbone of AWS’s cloud infrastructure. While the region’s name might suggest a specific part of the United States, many global services still depend on it. This concentration creates a weak point for the internet, which was originally envisioned as decentralized.

After fixing the original error, Amazon moved to address the underlying vulnerability. The company suspended DynamoDB DNS automation globally and outlined its plans to redesign the flawed system to prevent similar race conditions.

Although limited in timeframe and impact, this outage serves as a stark reminder of the importance of diversifying infrastructure. AWS’s data center infrastructure is among the most geographically distributed in the world. However, the outage revealed that physical diversity alone is insufficient; software redundancy is essential as well. The 2024 CrowdStrike outage, which shook the world and was caused by a single bad update to security software, made the same point.

This event further reinforces the need for sovereign clouds, which have become an essential part of recent digital transformation efforts in the UAE and Saudi Arabia. Regional governments have long championed the idea of the sovereign cloud, and the outage underscored the importance of that initiative. Critical services in both countries experienced little to no disruption from the blackout, because most of them are independently hosted and operated from local servers.

When the precursors of the internet began in the 1970s, nearly all connected devices were located in a few research centers and universities. Despite its small size, the global network was relatively diverse at that time. Contrary to what you might expect, the growth of users didn’t increase the diversity of the internet; instead, it had the opposite effect, prompting industry consolidation. Soon, local servers hosted by individuals or companies were replaced with more centralized solutions. Today, this centralization calls for a reconsideration, both by sovereign governments and service providers.

Since online services are no longer considered a luxury, especially in the business sector, disruptions should be regarded as seriously as issues with electricity or other critical infrastructure. Therefore, experts recommend that providers adopt multi-region architectures, diversify their dependencies, and conduct realistic disaster simulations to better prevent cascading failures. Reports indicate that AWS is already implementing these strategies, but only time will reveal how effective they will be.
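As a rough illustration of the multi-region recommendation, the sketch below tries a list of regions in order and falls back to the next one when a region is unavailable. Everything here is an assumption made for the example: `read_with_failover`, `RegionUnavailable`, and `fake_fetch` are hypothetical names, the region list is arbitrary, and the data is assumed to be already replicated to every region. The stubbed `fake_fetch` also acts as a very small disaster simulation by forcing the primary region to fail.

```python
from typing import Callable


class RegionUnavailable(Exception):
    """Raised when a region cannot serve the request (hypothetical)."""


def read_with_failover(
    key: str,
    regions: list[str],
    fetch: Callable[[str, str], str],
) -> tuple[str, str]:
    """Try each region in order; return (region, value) from the first success."""
    failed = []
    for region in regions:
        try:
            return region, fetch(region, key)
        except RegionUnavailable:
            failed.append(region)  # remember the failure and try the next region
    raise RegionUnavailable(f"all regions failed: {failed}")


def fake_fetch(region: str, key: str) -> str:
    # Simulated outage: the primary region cannot be reached.
    if region == "us-east-1":
        raise RegionUnavailable("DNS resolution failed")
    return f"{key}@{region}"


served_by, value = read_with_failover(
    "profile:42", ["us-east-1", "eu-west-1"], fake_fetch
)
print(served_by, value)
```

Client-side failover like this only helps if the secondary region does not itself depend on the failed one, which is why the recommendations pair multi-region designs with diversified dependencies.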