Making data move faster with S3 Vectors: AWS’s Andy Warfield on RAG and AI agents

Across the cloud industry, AI is changing what customers expect from data platforms. The shift is no longer only about storing more information, but about making that information easier to search by meaning, connect to AI systems instantly, and use at scale without adding unnecessary complexity.

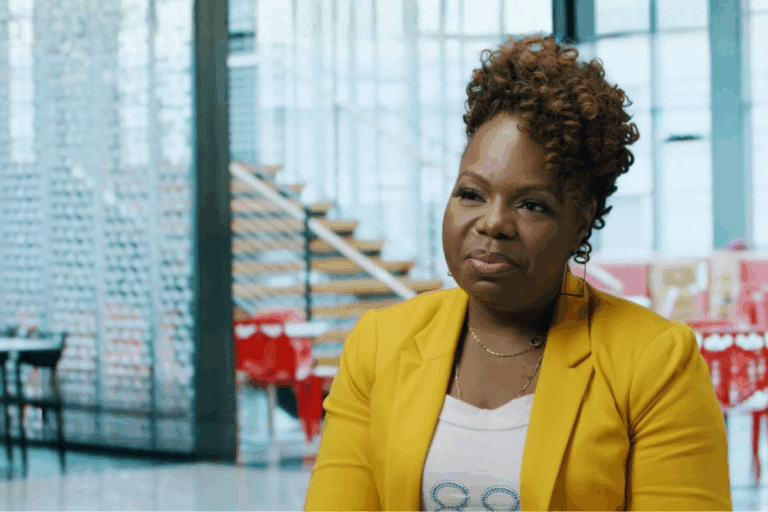

At AWS re:Invent 2025, that direction has been apparent in the data conversation. For Andy Warfield, VP and distinguished engineer at Amazon Web Services, working across storage, data, and analytics, the priority is helping customers move faster and get more value from the data they already have. From his perspective, one of the most important announcements tied to that goal is S3 Vectors.

In this exclusive interview, Warfield explains how S3 Vectors has become central to modern AI workflows, why AWS is building this capability into Amazon S3, and how use cases are expanding from RAG (Retrieval-Augmented Generation) and video understanding to enterprise anomaly detection.

What is AWS emphasizing at re:Invent this year in your area?

At AWS, I spend most of my time on data, and one of the biggest themes of re:Invent 2025 is moving faster and getting more value from the data you work with. The biggest announcements we have center around that ability.

What’s driving this is the shift in how customers want to use data today. It’s not enough for data to be stored cheaply or safely. Teams want to activate it for AI, analytics, and real-time decision-making without needing to build complex new stacks.

One specific launch I want to highlight is S3 Vectors. It’s a vector capability built into Amazon S3. The idea is to make vector storage feel like a natural extension of the S3 experience, so customers can enable semantic search and AI-driven retrieval without moving their data into a separate, specialized system.

What does vector search mean in practical terms?

There’s a remarkable area of computer science that has advanced significantly over the last decade. As we built large-scale machine learning models, we found they had an extra application. They could take complex data like images, video, and text and summarize meaning and relationships in a form that the model can handle.

Embedding models allow you to summarize that content into what’s called a vector. It’s a mathematical construct made of a large list of numbers. In a high-dimensional space, related things sit closer together. This is valuable when you need to find the right information without knowing the exact search term to use.
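To make the idea of "related things sit closer together" concrete, here is a minimal Python sketch. It is not the S3 Vectors API, and the three-number vectors are hand-made stand-ins (an assumption for illustration); a real embedding model would produce vectors with hundreds or thousands of dimensions. The helper names `cosine_similarity` and `nearest` are hypothetical.

```python
import math

def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hand-made toy vectors (assumption): a real embedding model would generate these.
toy_embeddings = {
    "puppy playing fetch": [0.9, 0.1, 0.0],
    "dog chasing a ball": [0.8, 0.2, 0.1],
    "quarterly tax filing": [0.0, 0.1, 0.9],
}

def nearest(query_vector, embeddings, k=2):
    """Rank stored items by similarity to the query and return the top k labels."""
    ranked = sorted(
        embeddings.items(),
        key=lambda item: cosine_similarity(query_vector, item[1]),
        reverse=True,
    )
    return [label for label, _ in ranked[:k]]

# Stand-in for the embedding of a query such as "pets having fun outside".
query = [0.85, 0.15, 0.05]
top_matches = nearest(query, toy_embeddings)
print(top_matches)
```

The query shares no keywords with the stored labels, yet the two dog-related items rank above the tax item because their vectors point in a similar direction.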

Why bring vectors into S3 instead of relying on separate systems?

Before S3 Vectors, there were robust systems for vector search. But by treating it as an S3 problem, we’re moving it from an expensive memory-based database approach to a more cost-effective storage-based construct.

The goal is to enable volume: indexing large amounts of data and retrieving the right information in a scalable, practical way.

How does this help consumers and everyday use cases?

It’s for both consumers and businesses.

A simple consumer-facing example is retrieval-augmented generation (RAG). You might have a large foundation model trained on enormous amounts of data, but not on your organization’s private data or your email. With RAG, you issue a vector search against a separate dataset, pull the relevant information into the prompt, and now the model can analyze that data as well.
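The RAG flow described above (search a separate dataset, then pull the results into the prompt) can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions: the documents, their toy vectors, and the functions `retrieve` and `build_prompt` are all hypothetical, the embedding step is replaced by hand-made vectors, and the final call to a foundation model is left out.

```python
import math

def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical private documents with precomputed toy embeddings (assumption).
private_docs = [
    {"text": "Travel policy: economy class for flights under 6 hours.",
     "vector": [0.9, 0.1, 0.0]},
    {"text": "The office closes at 6 pm on Fridays.",
     "vector": [0.1, 0.9, 0.1]},
    {"text": "Expense reports are due by the 5th of each month.",
     "vector": [0.6, 0.1, 0.7]},
]

def retrieve(query_vector, docs, k=1):
    """Vector search step: return the k passages most similar to the query."""
    ranked = sorted(
        docs,
        key=lambda d: cosine_similarity(query_vector, d["vector"]),
        reverse=True,
    )
    return [d["text"] for d in ranked[:k]]

def build_prompt(question, context_passages):
    """Augmentation step: place the retrieved passages ahead of the question."""
    context = "\n".join(f"- {p}" for p in context_passages)
    return (
        "Use only the context below to answer.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )

question = "Can I book business class for a 4-hour flight?"
question_vector = [0.95, 0.05, 0.05]  # stand-in for embedding the question
passages = retrieve(question_vector, private_docs, k=1)
prompt = build_prompt(question, passages)
print(prompt)
```

The resulting `prompt` string is what would be sent to the model, so the model can reason over private data it was never trained on.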

Another example is video. Strong embedding models can index video at the shot or scene level. That makes editing and content assembly much more automatable. We’re seeing this create opportunities in broadcast sports for building highlight reels quickly and in home videos, where people can generate meaningful montages more easily.

An exciting example for enterprises is anomaly detection. When customers embed events, logs, or financial data into a vector space, they can use distance to spot unusual patterns. That signal can support use cases such as fraud detection by comparing an item’s behavior to known valid or fraudulent patterns.
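As a rough illustration of using distance as an anomaly signal, the Python sketch below compares new events to the center of a set of known valid examples. Everything here is an assumption for illustration: the two-dimensional vectors are hand-made, the names `centroid` and `is_anomalous` are hypothetical, and the threshold (twice the largest distance seen among valid examples) is an arbitrary heuristic rather than a recommended setting.

```python
import math

def euclidean_distance(a, b):
    """Straight-line distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def centroid(vectors):
    """Component-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]

# Toy embeddings of events already known to be valid (assumption).
known_valid = [[1.0, 1.0], [1.2, 0.9], [0.9, 1.1], [1.1, 1.0]]
center = centroid(known_valid)

# Arbitrary heuristic: flag anything more than twice as far from the center
# as the farthest known valid example.
threshold = 2 * max(euclidean_distance(v, center) for v in known_valid)

def is_anomalous(vector):
    """Return True when the vector lies unusually far from the valid cluster."""
    return euclidean_distance(vector, center) > threshold

new_events = {"typical": [1.05, 0.95], "unusual": [4.0, -2.0]}
flags = {name: is_anomalous(vec) for name, vec in new_events.items()}
print(flags)
```

A fraud-detection variant could add a second cluster of known fraudulent examples and check which cluster a new event sits closer to.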

With data volumes expanding so rapidly, how does AWS continue to scale?

The scale of AWS storage operations is still remarkable to me, even after years of working with these systems. The reality is that the core challenge hasn’t changed. We solve problems, the system grows, and then we face the next wave of scale constraints. We handle this through discipline, a strong operational focus, and continued engineering creativity to maintain performance and resilience as demand accelerates.

How has your academic background influenced your leadership style?

While at the university, I taught undergraduates and supervised graduate students conducting research. One thing I learned was that you can’t get people to do good work just by telling them to do it.

You can get the best outcomes by finding what you’re both interested in and being enthusiastic about it. That empathy for what excites people about their work is something I carried into how I operate in a senior role.

What advice would you give to engineers who want to join AWS?

I would give two pieces of advice. First, become fluent in these emerging AI-based tools for development and other workflows. Learn what’s possible and how it may change the workforce.

Second, don’t use these tools to avoid the details. If they become an excuse to be checked out or less aware of what you’re working on, you’re doing yourself a disservice. The benefit comes when these tools reduce friction and allow you to focus on deeper technical work while improving your understanding of systems.

How does AWS sustain innovation at this scale?

We make it part of everything we do. Operationally, we stay in the details. We review what’s happening weekly, respond to escalations, and, when something surprises us, invest the effort needed to improve the systems.

On the development side, when we’re at our best, engineers know what they want to build for customers and speak directly with them. That closeness keeps innovation focused and fast.

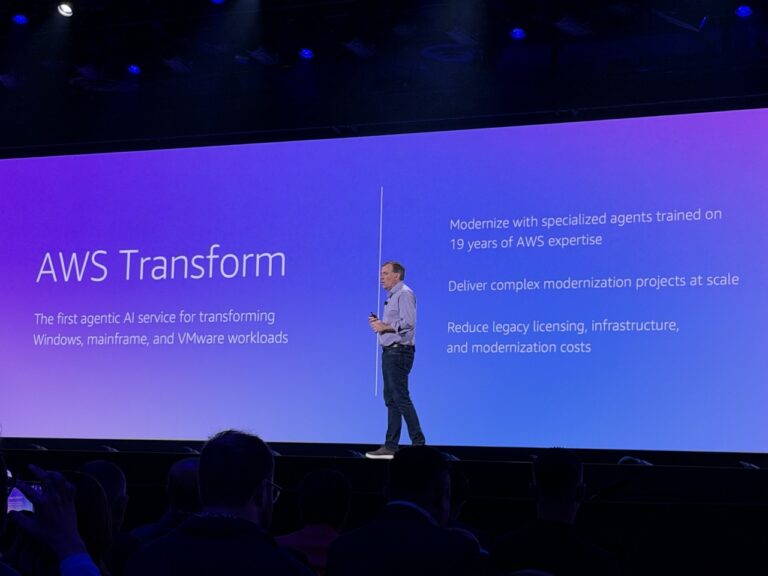

How is the cloud experience evolving for builders today?

The service area continues to expand, and a few years ago, that could have felt frustrating. But with agent-based development environments, the ability to explore a large tool surface conversationally is becoming a strength.

Builders can try different approaches and choose the right tool for the job more easily. That shift turns what used to be a risk of complexity into an opportunity.

Any final thoughts on re:Invent this year?

I’m more excited about re:Invent this year than I’ve been in ages. In the past six months, I’ve written more code than I had in the previous few years combined. It’s a remarkable time, and I’m excited to see what happens in the next 12 months.